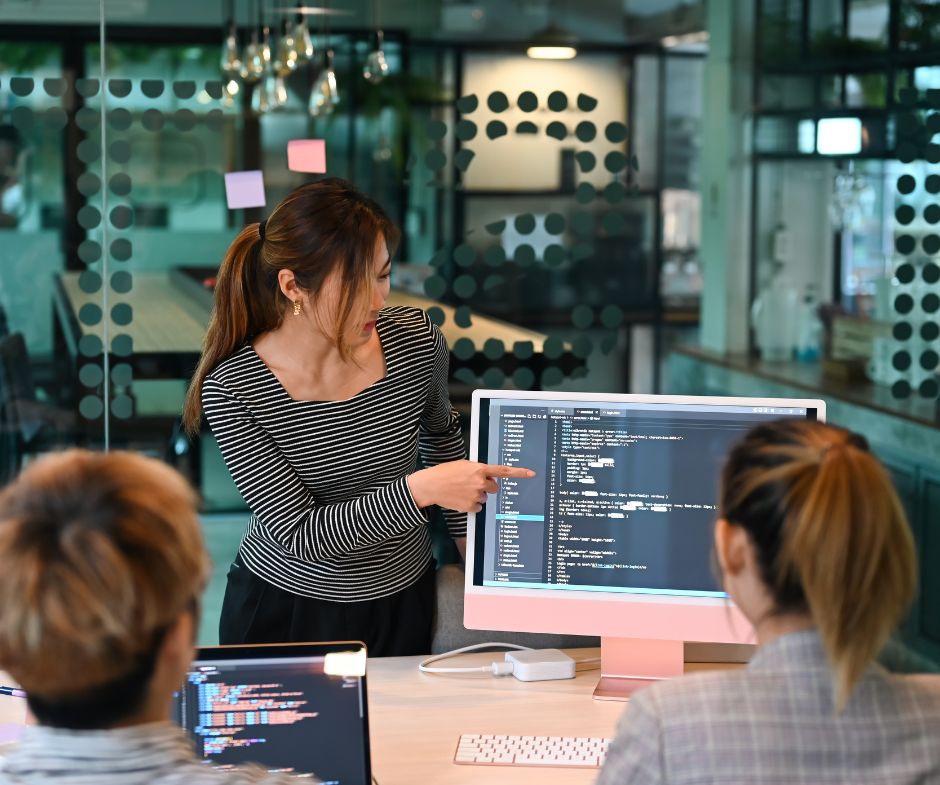

Everything works perfectly on your laptop. All tests pass with green checkmarks. You push to production feeling confident. Then your boss opens the app and everything explodes.

The payment system freezes. Users can’t log in. The dashboard shows random errors. And somehow, none of this happened in testing.

You spend hours trying to reproduce bugs that vanish the moment you look directly at them. You scroll through endless logs searching for clues. You refresh production hoping the problem magically fixed itself. It didn’t.

The pressure mounts fast. Managers want answers now. Users flood support with complaints. Everyone expects you to fix it immediately, but you can’t even figure out what’s broken.

This post shows how to build a backend that reveals issues early and makes debugging dramatically easier. No more guessing games. No more three-hour log-diving sessions at midnight.

Why Debugging Production Issues Is So Hard

Understanding why production debugging feels impossible helps you fix the underlying problems. Several factors conspire to hide bugs from developers.

Environment Drift

Local development, staging, and production often differ in configuration, environment variables, and database states. Your laptop has clean data. Production has millions of messy records from real users.

What works in dev collapses in prod because production is a different planet. Different database versions. Different memory limits. Different network conditions. Your code encounters situations that never existed in testing.

Insufficient or Noisy Logging

Either no logs exist, or logs contain everything except the useful information you need.

Underlogging means critical operations happen silently. Payment processing completes without recording success or failure. You have no idea what happened.

Overlogging causes noise. Thousands of “debug: checking user permissions” messages bury the one error that matters. Finding the needle in the log haystack takes forever.

Race Conditions and Concurrency Issues

Problems only appear when many users hit the system simultaneously. Two users click the same button at exactly the same moment. Database locks conflict. Transactions fail.

Developers often can’t reproduce these issues on a laptop. Running one test user at a time never triggers the race condition. The bug only exists when production traffic reaches critical mass.

Hidden Timeouts or Network Failures

Third-party APIs or microservices fail silently in ways your error handling doesn’t catch. The payment gateway stops responding, and your code waits indefinitely because nobody set a timeout limit.

Backend systems that retry endlessly mask the root cause. Logs show “retrying request” fifty times but never explain why the original request failed.
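Here is a minimal sketch of putting an explicit limit on an outbound call, written in TypeScript for the Node.js stack this post assumes. `withTimeout` and `TimeoutError` are hypothetical helpers. The point is that the failure names the dependency and the limit, so the log explains why a request failed instead of only showing retries.

```typescript
// Hypothetical error type: records which dependency timed out and the limit used.
class TimeoutError extends Error {
  readonly dependency: string;
  readonly ms: number;

  constructor(dependency: string, ms: number) {
    super(`${dependency} did not respond within ${ms}ms`);
    this.name = "TimeoutError";
    this.dependency = dependency;
    this.ms = ms;
  }
}

// Hypothetical helper: reject with a descriptive TimeoutError if `work`
// has not settled within `ms` milliseconds.
function withTimeout<T>(dependency: string, ms: number, work: Promise<T>): Promise<T> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const timeout = new Promise<never>((_, reject) => {
    timer = setTimeout(() => reject(new TimeoutError(dependency, ms)), ms);
  });
  // Whichever settles first wins; always clear the timer so it cannot fire later.
  return Promise.race([work, timeout]).finally(() => clearTimeout(timer));
}
```

`Promise.race` only stops the wait. It does not cancel the underlying work. For HTTP calls, passing an `AbortSignal` to `fetch` also cancels the request itself.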

Poor Error Boundaries

One tiny error in a controller breaks an entire request chain. An undefined variable crashes the whole endpoint. Users see generic 500 errors with zero context about what went wrong.

Real-world examples make this concrete:

- A payment API failing only during peak hours, when connection pools are exhausted

- Cache returning stale values because invalidation logic has a bug

- Background jobs failing silently with no alerts or notifications

The Foundation: Observability Over Guesswork

Observability means your system tells you what’s happening instead of making you guess. Build visibility into every layer from day one.

The three pillars of observability work together to reveal system behavior.

Logging

Write structured logs in JSON format instead of free-form text messages. Each entry should include context: user ID, endpoint name, request ID, timestamp, and service name.

Use log levels consistently:

- Info: normal operations

- Warning: potential problems

- Error: failures that need attention

- Critical: emergencies requiring immediate action

Structured logs let you search and filter efficiently. Finding all errors for user #12345 takes seconds instead of hours.
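To illustrate consistent levels and fields, here is a tiny hand-rolled TypeScript logger. It is not the API of Winston or Pino, which are covered below. `makeLogger`, `errorsForUser`, and the field names are assumptions chosen to mirror the context listed above.

```typescript
// Hand-rolled sketch, not the Winston or Pino API: every entry is one JSON line
// with the same core fields, so it can be searched and filtered reliably.
type Level = "info" | "warning" | "error" | "critical";

interface LogEntry {
  level: Level;
  message: string;
  service: string;
  timestamp: string;
  [field: string]: unknown; // extra context: userId, endpoint, requestId, ...
}

type Context = Record<string, unknown>;

function makeLogger(service: string, sink: (line: string) => void = console.log) {
  const write = (level: Level, message: string, context: Context = {}) => {
    // Context is spread first so it can never overwrite the core fields.
    const entry: LogEntry = { ...context, level, message, service, timestamp: new Date().toISOString() };
    sink(JSON.stringify(entry));
  };
  return {
    info: (message: string, context?: Context) => write("info", message, context),
    warning: (message: string, context?: Context) => write("warning", message, context),
    error: (message: string, context?: Context) => write("error", message, context),
    critical: (message: string, context?: Context) => write("critical", message, context),
  };
}

// "All errors for user 12345" becomes a field filter instead of a text search.
function errorsForUser(jsonLines: string[], userId: number): LogEntry[] {
  return jsonLines
    .map((line) => JSON.parse(line) as LogEntry)
    .filter((entry) => entry.level === "error" && entry.userId === userId);
}
```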

Metrics

Metrics are numeric measurements, such as counters and timers, that track failures, latency, and throughput. Metrics answer questions instantly.

Examples:

- How many login failures happened in the last hour?

- What’s the average API response time right now?

- How many database queries are running?

Metrics show patterns. Sudden spikes indicate problems. Gradual increases warn about growing issues before they become critical.
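The questions above map onto two simple metric types: a count of events over a time window and a latency average. This in-memory sketch is hypothetical and unbounded, because it never prunes old samples. In production, a metrics system such as Prometheus would store and aggregate these values for you.

```typescript
// Hypothetical in-memory metrics for illustration only. This sketch never
// prunes old samples; a real metrics system handles storage and aggregation.
class EventCounter {
  private timestamps: number[] = [];

  record(at: number = Date.now()): void {
    this.timestamps.push(at);
  }

  // "How many login failures happened in the last hour?" -> countSince(60 * 60 * 1000)
  countSince(windowMs: number, now: number = Date.now()): number {
    return this.timestamps.filter((t) => now - t <= windowMs).length;
  }
}

class LatencyTracker {
  private samplesMs: number[] = [];

  observe(durationMs: number): void {
    this.samplesMs.push(durationMs);
  }

  // "What's the average API response time right now?"
  average(): number {
    if (this.samplesMs.length === 0) return 0;
    return this.samplesMs.reduce((sum, ms) => sum + ms, 0) / this.samplesMs.length;
  }
}
```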

Tracing

Tracing lets you follow a single request across multiple microservices. When a request touches five different services, tracing shows the complete journey.

It is most useful in microservice or distributed architectures, where one user action triggers a cascade of backend operations. Tracing reveals which service caused delays or failures.

Observability beats blind debugging every single time. Guessing wastes hours. Visibility provides answers immediately.

Logging That Makes Debugging Ten Times Easier

Good logging transforms impossible debugging sessions into straightforward investigations. Bad logging guarantees frustration.

Structured Logging

Structure means consistency. Every log entry follows the same format with the same fields. Machines can parse it. Developers can search it. Tools can analyze it automatically.

Compare these logs:

Bad log: "Error while saving"

Good log:

```json
{
  "service": "payments",
  "event": "db_save_failed",
  "orderId": 321,
  "userId": 456,
  "error": "unique_constraint_violation",
  "timestamp": "2025-11-16T10:30:45Z"
}
```

The good log provides everything needed to debug. Which service? Payments. What operation? Database save. Which order? #321. What failed? Unique constraint. When? Exact timestamp.

Correlation IDs

Each request gets a unique ID that propagates through the entire system. Every log entry includes this ID.

A user reports “checkout failed.” You search the logs for that request’s correlation ID and instantly see:

- Request entered at API gateway

- Validated user session

- Called inventory service

- Inventory service returned “out of stock”

- Showed error to user

The complete story appears without guessing. No scrolling through unrelated logs. Just the exact sequence of events for that one request.
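One way to get that story is to bind the correlation ID into a per-request logger so that no call site can forget it. This is a sketch under assumptions: `requestLogger`, `storyFor`, and the entry fields are illustrative names. The ID itself would typically be generated where the request enters the system (for example with `crypto.randomUUID()`) and forwarded to other services in a request header. In Node.js, `AsyncLocalStorage` from `node:async_hooks` can carry the ID implicitly instead of passing it around by hand.

```typescript
// Illustrative entry shape; a real log line would carry more fields.
interface Entry {
  correlationId: string;
  service: string;
  step: string;
  timestamp: number;
}

// Every log call made through the returned function carries the same correlation ID.
function requestLogger(correlationId: string, sink: Entry[]) {
  return (service: string, step: string, timestamp: number = Date.now()): void => {
    sink.push({ correlationId, service, step, timestamp });
  };
}

// Rebuild one request's story: keep only its entries, in time order.
function storyFor(correlationId: string, entries: Entry[]): string[] {
  return entries
    .filter((e) => e.correlationId === correlationId)
    .sort((a, b) => a.timestamp - b.timestamp)
    .map((e) => `${e.service}: ${e.step}`);
}
```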

Tools for Implementation

Node.js developers use:

- Winston: Flexible logging library with transport options

- Pino: Extremely fast structured logger

Centralized log management:

- Datadog: Collects logs from all servers in one searchable interface

- Logtail: Simple log aggregation for smaller teams

- Elastic Stack: Powerful search and analysis for large systems

These tools collect logs from every server, make them searchable, and provide dashboards showing patterns.

Monitoring That Alerts You Before Users Do

Catching problems before customers notice them prevents support floods and maintains trust. Good monitoring watches your backend constantly.

Common Alerts Every Backend Needs

Set up automatic alerts for critical thresholds:

API response time spikes: Alert when average response time exceeds 2 seconds. Slow responses indicate problems brewing.

Error rate crossing threshold: Alert when errors exceed 1% of requests. An occasional error is normal. Hundreds mean something broke.

Database latency increasing: Alert when queries take longer than usual. Database slowdowns cause cascading failures.

Queue backlogs: Alert when background job queues grow unexpectedly. A growing backlog means processing can’t keep up with incoming work.
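The four rules can be expressed as a single check over a window of stats. This is a hypothetical sketch. The 2-second and 1% thresholds come from the text. The "double the baseline" rule for database latency and the 1,000-job queue limit are placeholder assumptions you would tune.

```typescript
// Aggregated stats for one evaluation window (field names are illustrative).
interface WindowStats {
  avgResponseMs: number;
  requests: number;
  errors: number;
  dbLatencyMs: number;
  dbBaselineMs: number; // "usual" database latency, e.g. last week's average
  queueDepth: number;
  previousQueueDepth: number;
}

function firingAlerts(s: WindowStats): string[] {
  const alerts: string[] = [];
  // From the text: average response time above 2 seconds.
  if (s.avgResponseMs > 2000) alerts.push("api_response_time");
  // From the text: more than 1% of requests failing.
  if (s.requests > 0 && s.errors / s.requests > 0.01) alerts.push("error_rate");
  // Assumption: "longer than usual" means more than double the baseline.
  if (s.dbLatencyMs > 2 * s.dbBaselineMs) alerts.push("db_latency");
  // Assumption: a queue that is both large and still growing is falling behind.
  if (s.queueDepth > 1000 && s.queueDepth > s.previousQueueDepth) alerts.push("queue_backlog");
  return alerts;
}
```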

Visibility Dashboards

Build dashboards showing real-time system health:

- Current API latency by endpoint

- Database query performance

- Active background jobs and their status

- Error counts by service

- CPU and memory usage across servers

Dashboards let you spot trends. Gradual memory increases warn about memory leaks before they crash servers.
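Spotting a gradual memory increase is a trend question rather than a threshold question. As a minimal sketch, assuming hourly memory samples, you can fit a least-squares line through them and flag sustained growth. The 50 MB/hour threshold is an arbitrary illustrative value.

```typescript
interface Sample {
  hour: number;
  memoryMb: number;
}

// Slope of the least-squares line through the samples, in MB per hour.
function growthPerHour(samples: Sample[]): number {
  const n = samples.length;
  if (n < 2) return 0;
  const meanX = samples.reduce((sum, p) => sum + p.hour, 0) / n;
  const meanY = samples.reduce((sum, p) => sum + p.memoryMb, 0) / n;
  let numerator = 0;
  let denominator = 0;
  for (const p of samples) {
    numerator += (p.hour - meanX) * (p.memoryMb - meanY);
    denominator += (p.hour - meanX) ** 2;
  }
  return denominator === 0 ? 0 : numerator / denominator;
}

// Flag steady growth; noisy but flat usage produces a slope near zero.
function looksLikeLeak(samples: Sample[], thresholdMbPerHour = 50): boolean {
  return growthPerHour(samples) > thresholdMbPerHour;
}
```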

Monitoring Tools

Backend developers commonly use:

- Prometheus: Collects metrics from your application

- Grafana: Creates beautiful dashboards from Prometheus data

- New Relic: All-in-one monitoring and alerting

- Datadog: Comprehensive monitoring across services

These tools can send alerts to Slack, email, or your phone when a threshold is breached. No more discovering problems hours after they started.

Tracing: The Secret Weapon for Complex Apps

Tracing is like giving every request a travel diary. It records everywhere the request went and how long each stop took.

How Tracing Works

Each request gets a unique trace ID. Every service that touches the request adds information to the trace:

- Request enters API gateway (5ms)

- Authenticates user (12ms)

- Calls product service (45ms)

- Product service queries database (120ms)

- Calls inventory service (200ms)

- Returns response (8ms)

Total time: 390ms, with the inventory service call taking the longest at 200ms. Now you know exactly where optimization efforts should focus.
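The arithmetic behind that summary can be written as a quick TypeScript check that sums the durations and finds the slowest step:

```typescript
// Durations (ms) from the list above, treated as sequential like the text does.
const steps: Array<[string, number]> = [
  ["API gateway", 5],
  ["authenticate user", 12],
  ["product service", 45],
  ["product database query", 120],
  ["inventory service", 200],
  ["return response", 8],
];

const totalMs = steps.reduce((sum, [, ms]) => sum + ms, 0);
const slowest = steps.reduce((max, step) => (step[1] > max[1] ? step : max));

console.log(totalMs, slowest[0]); // prints: 390 inventory service
```

This follows the text in treating the steps as sequential. In real traces, spans are often nested (the database query above likely runs inside the product service call). That is why tracing UIs draw spans on a timeline rather than simply adding them up.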

Span and Trace ID

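A trace represents one complete request journey. A span represents one operation within that trace. Each service passes the trace ID, along with the ID of the calling span, to downstream services (typically in a request header), so every span connects to the same trace.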
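The following is a minimal model of traces and spans. It assumes a hand-rolled `Span` type rather than the OpenTelemetry API. Each span keeps its trace's `traceId` and points at its parent. Across service boundaries, the IDs travel in a header. The `traceparent` layout used here (version, trace ID, parent span ID, flags) follows the W3C Trace Context format, but the helpers themselves are illustrative only.

```typescript
// Hand-rolled model for illustration, not the OpenTelemetry API.
interface Span {
  traceId: string; // shared by every span in one request's journey
  spanId: string; // unique to this one operation
  parentSpanId?: string; // the operation that caused this one
  name: string;
}

// Demo-quality IDs; real tracers use a stronger random source.
function randomHex(bytes: number): string {
  let hex = "";
  for (let i = 0; i < bytes; i++) {
    hex += Math.floor(Math.random() * 256).toString(16).padStart(2, "0");
  }
  return hex;
}

function startSpan(name: string, parent?: Span): Span {
  return {
    traceId: parent ? parent.traceId : randomHex(16), // new trace only at the root
    spanId: randomHex(8),
    parentSpanId: parent ? parent.spanId : undefined,
    name,
  };
}

// Outgoing call: encode as "version-traceId-spanId-flags" (flags 01 = sampled).
function toTraceparent(span: Span): string {
  return `00-${span.traceId}-${span.spanId}-01`;
}

// Incoming call: recover the caller's span to use as the parent of local spans.
function fromTraceparent(header: string): Span | undefined {
  const match = /^00-([0-9a-f]{32})-([0-9a-f]{16})-[0-9a-f]{2}$/.exec(header);
  if (!match) return undefined;
  return { traceId: match[1], spanId: match[2], name: "remote parent" };
}
```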

Where Tracing Shines

Tracing solves debugging nightmares in:

Microservices: When requests bounce through ten different services, tracing shows the complete path and identifies bottlenecks.

Serverless functions: Functions start and stop quickly. Tracing connects them despite their ephemeral nature.

Multi-database systems: When one request touches three different databases, tracing shows which database caused delays.

Tracing Tools

Industry standard options:

- OpenTelemetry: Open standard for tracing across any platform

- Jaeger: Visualizes traces with timeline views

- Honeycomb: Advanced tracing with powerful query capabilities

These tools show request flows visually. Complex systems become understandable when you see exactly what happened.

Designing a Backend That Debugs Itself

Architecture choices determine whether debugging feels easy or impossible. Build defensively from the start.

Add Error Boundaries Around Every External Call

Every API call, database query, and queue operation should have clear fallback behavior. Never let one failure crash the entire system.

Wrap external calls in try-catch blocks. Define what happens when they fail. Return cached data? Show partial results? Retry? Decide explicitly instead of letting errors bubble randomly.
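Here is a sketch of an explicit boundary around one external call, where the chosen fallback is the last good (cached) response. `getPricesWithFallback`, the `fetchPrices` function it receives, and the module-level cache are hypothetical. The part that matters is the explicit decision to serve stale data or rethrow.

```typescript
type Prices = Record<string, number>;

// Hypothetical in-memory cache of the last successful response per region.
const lastKnownPrices = new Map<string, Prices>();

async function getPricesWithFallback(
  region: string,
  fetchPrices: (region: string) => Promise<Prices>,
): Promise<{ prices: Prices; stale: boolean }> {
  try {
    const prices = await fetchPrices(region);
    lastKnownPrices.set(region, prices); // refresh the fallback copy on success
    return { prices, stale: false };
  } catch (err) {
    const cached = lastKnownPrices.get(region);
    // Record the failure in structured form, including which fallback was used.
    console.error(JSON.stringify({ event: "price_fetch_failed", region, error: String(err), usedCache: cached !== undefined }));
    // Explicit decision: serve cached data if we have it, otherwise rethrow.
    if (cached) return { prices: cached, stale: true };
    throw err;
  }
}
```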

Use Circuit Breakers

Circuit breakers prevent one failing service from dragging others down. When a service fails repeatedly, the circuit breaker stops calling it temporarily.

This gives failing services time to recover. It prevents cascading failures where one broken service overwhelms everything else with retries.
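Below is a minimal circuit breaker sketch. It is a hypothetical class, not a particular library, with the usual three states: closed passes calls through, open fails fast, and half-open lets calls through after a cooldown to test recovery. For simplicity, it does not limit how many trial calls run concurrently while half-open.

```typescript
type State = "closed" | "open" | "half-open";

class CircuitBreaker {
  private state: State = "closed";
  private failures = 0;
  private openedAt = 0;
  private readonly failureThreshold: number;
  private readonly cooldownMs: number;
  private readonly now: () => number;

  constructor(failureThreshold: number, cooldownMs: number, now: () => number = Date.now) {
    this.failureThreshold = failureThreshold;
    this.cooldownMs = cooldownMs;
    this.now = now; // injectable clock, handy for tests
  }

  getState(): State {
    return this.state;
  }

  async call<T>(fn: () => Promise<T>): Promise<T> {
    if (this.state === "open") {
      // Still cooling down: fail fast without touching the struggling service.
      if (this.now() - this.openedAt < this.cooldownMs) {
        throw new Error("circuit open: failing fast");
      }
      this.state = "half-open"; // cooldown elapsed: allow a trial call
    }
    try {
      const result = await fn();
      this.failures = 0;
      this.state = "closed";
      return result;
    } catch (err) {
      this.failures++;
      // A failed trial, or too many failures in a row, opens the circuit.
      if (this.state === "half-open" || this.failures >= this.failureThreshold) {
        this.state = "open";
        this.openedAt = this.now();
      }
      throw err;
    }
  }
}
```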

Implement Retries with Exponential Backoff

Don’t hammer failing APIs with instant retries. Wait a bit. If it fails again, wait longer. Exponential backoff prevents overwhelming already-struggling services.

- First retry: wait 1 second

- Second retry: wait 2 seconds

- Third retry: wait 4 seconds

Give systems breathing room to recover.
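The schedule above follows `base * 2^(attempt - 1)`. Here is a sketch of a retry helper built on it, assuming a 1-second base and a 30-second cap (the cap is an added assumption). The `wait` parameter exists only so the behavior can be tested without real sleeps.

```typescript
// Delay before retry number `attempt` (1-based): 1 s, 2 s, 4 s, ... capped at maxMs.
function backoffDelayMs(attempt: number, baseMs = 1000, maxMs = 30_000): number {
  return Math.min(baseMs * 2 ** (attempt - 1), maxMs);
}

const sleep = (ms: number): Promise<void> => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Call fn; after each failure, wait longer before retrying, up to maxRetries retries.
async function retryWithBackoff<T>(
  fn: () => Promise<T>,
  maxRetries = 3,
  wait: (ms: number) => Promise<void> = sleep,
): Promise<T> {
  for (let attempt = 0; ; attempt++) {
    try {
      return await fn();
    } catch (err) {
      // Out of retries: surface the original error instead of masking it.
      if (attempt >= maxRetries) throw err;
      await wait(backoffDelayMs(attempt + 1));
    }
  }
}
```

Adding random jitter to each delay is a common refinement so that many clients do not retry in lockstep.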

Centralize Error Handling

Create one place that manages how errors are logged, categorized, and displayed to users. That gives you consistent error handling across your entire backend.

Every controller uses the same error handler. Every service follows the same patterns. Debugging becomes predictable because errors always appear the same way.
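Here is one way "one place" could look: every controller throws, and a single `handleError` function decides the status code, the log entry, and the user-facing message. `AppError` and its categories are hypothetical names, not part of Express or any other framework.

```typescript
type Category = "validation" | "not_found" | "dependency" | "internal";

// Hypothetical application error carrying a category and an HTTP status.
class AppError extends Error {
  readonly category: Category;
  readonly status: number;

  constructor(category: Category, status: number, message: string) {
    super(message);
    this.name = "AppError";
    this.category = category;
    this.status = status;
  }
}

interface ErrorResponse {
  status: number;
  body: { error: string; requestId: string };
}

function handleError(
  err: unknown,
  requestId: string,
  log: (entry: object) => void = (entry) => console.error(JSON.stringify(entry)),
): ErrorResponse {
  const known = err instanceof AppError ? err : undefined;
  const category: Category = known ? known.category : "internal";
  const status = known ? known.status : 500;
  // Full detail is logged once, in one format, tied to the request ID.
  log({
    level: status >= 500 ? "error" : "warning",
    category,
    requestId,
    message: err instanceof Error ? err.message : String(err),
  });
  // Users see a safe message plus the request ID they can quote to support.
  const publicMessage = known && status < 500 ? known.message : "Something went wrong";
  return { status, body: { error: publicMessage, requestId } };
}
```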

Use Validation at Every Layer

Data entering controllers must be validated immediately. Don’t let garbage data propagate through your system.

Check types. Verify required fields exist. Validate formats. Reject bad data at the door instead of discovering problems deep in processing logic.
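Here is a hand-rolled validation sketch for a hypothetical checkout payload that rejects bad input before it reaches business logic. Schema libraries such as zod or Joi do the same job with less code. This version avoids dependencies so it runs as-is.

```typescript
// Hypothetical payload shape for a checkout endpoint.
interface CheckoutRequest {
  userId: number;
  email: string;
  quantity: number;
}

type Validated<T> = { ok: true; value: T } | { ok: false; errors: string[] };

function validateCheckout(input: unknown): Validated<CheckoutRequest> {
  const errors: string[] = [];
  const body = (typeof input === "object" && input !== null ? input : {}) as Record<string, unknown>;
  const { userId, email, quantity } = body;

  // Check types, required fields, and formats at the door.
  if (typeof userId !== "number" || !Number.isInteger(userId)) {
    errors.push("userId must be an integer");
  }
  if (typeof email !== "string" || !/^[^@\s]+@[^@\s]+$/.test(email)) {
    errors.push("email must look like an email address");
  }
  if (typeof quantity !== "number" || !Number.isInteger(quantity) || quantity < 1) {
    errors.push("quantity must be a positive integer");
  }

  if (errors.length > 0) return { ok: false, errors };
  // All checks passed, so the fields match the declared shape.
  return { ok: true, value: { userId, email, quantity } as CheckoutRequest };
}
```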

Graceful Degradation

When something fails, provide partial data instead of complete crashes. Recommendation engine down? Show products without recommendations. Reviews service slow? Display products and load reviews asynchronously.

Users prefer partial functionality over blank error pages.
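Here is a sketch of that idea: the product is required, while recommendations are optional and fall back to an empty list. The fetcher functions are hypothetical parameters. The `degraded` field is an assumed way to record what was left out so it can be logged or shown to the user.

```typescript
interface Product {
  id: number;
  name: string;
}

interface ProductPage {
  product: Product;
  recommendations: string[];
  degraded: string[]; // which optional sections were dropped
}

async function loadProductPage(
  productId: number,
  fetchProduct: (id: number) => Promise<Product>,
  fetchRecommendations: (id: number) => Promise<string[]>,
): Promise<ProductPage> {
  const degraded: string[] = [];
  const [product, recommendations] = await Promise.all([
    fetchProduct(productId), // required: if this fails, the request fails
    fetchRecommendations(productId).catch(() => {
      // Optional: degrade to an empty section instead of failing the page.
      degraded.push("recommendations");
      return [] as string[];
    }),
  ]);
  return { product, recommendations, degraded };
}
```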

Tools That Make Debugging Production a Breeze

The right tools transform debugging from archaeology to engineering.

For Logs

- Pino: Extremely fast JSON logger for Node.js

- Winston: Feature-rich logging with multiple transports

- ELK Stack: Elasticsearch, Logstash, Kibana for log aggregation

- Datadog Logs: Centralized logging with powerful search

For Monitoring

- Prometheus: Metric collection and storage

- Grafana: Dashboard and visualization

- New Relic: Full-stack monitoring and alerting

For Tracing

- OpenTelemetry: Vendor-neutral tracing standard

- Jaeger: Distributed tracing visualization

For Live Debugging

- Sentry: Tracks real-time exceptions with context and stack traces

- Postmortem tools: Help analyze incidents after they occur

Real-World Example

Sentry catches an exception: “TypeError: Cannot read property ‘email’ of undefined.”

Instead of just the error message, Sentry shows:

- Which user encountered it

- What they were doing

- Complete request parameters

- Browser and device information

- Stack trace showing exactly which line failed

- How many times it happened

In many cases, one Sentry alert provides everything needed to fix the bug, with no log diving required.

A Practical Debugging Workflow That Developers Can Follow

Turn chaos into structured investigation with a repeatable process.

Step 1: Identify the Pattern

Look for commonalities. Does the error affect:

- A specific endpoint?

- A particular time window?

- Certain user types?

- Specific features?

Patterns narrow the problem space dramatically.

Step 2: Pull Logs with Correlation ID

If you have a correlation ID from an affected request, search logs for that ID. See the complete request journey instantly.

No correlation ID? Search by pattern: timestamp range, endpoint, user ID, or error message.

Step 3: Check Metrics for Spikes

View dashboards during the problem period. Look for:

- Response time spikes

- Error rate increases

- Database latency jumps

- Memory or CPU spikes

Metrics show what changed when the problem started.

Step 4: Trace the Request Path

Use tracing tools to see the complete request flow. Identify which service caused delays or failures.

Slow traces reveal bottlenecks. Failed traces show error locations.

Step 5: Reproduce in Staging with Similar Load

Try to reproduce the problem in a staging environment. Match production conditions as closely as possible:

- Similar data volumes

- Comparable traffic levels

- Same configuration

Reproduction lets you test fixes safely.

Step 6: Patch, Deploy, Monitor

Apply the fix. Deploy to production. Monitor closely for:

- Error rate returning to normal

- Performance metrics improving

- No new problems appearing

Watch for regressions. Sometimes fixes create new issues.

This workflow transforms debugging from random exploration to systematic investigation.

Build a Backend That Tells You What’s Wrong Before You Have to Ask

Production debugging becomes easy when backends are built with visibility and self-diagnostics from the start.

Implement structured logging that provides context. Add monitoring that alerts before users complain. Use tracing to understand complex request flows. Design defensive architecture that contains failures gracefully.

These investments pay off quickly. The first production bug that takes 10 minutes to debug instead of 3 hours proves the value. An alert that flags a problem at 2 PM, instead of you discovering it at 2 AM, saves your sleep schedule.

Start with basics: add structured logging to critical paths. Set up one alert for error rates. Implement correlation IDs for request tracking. Each improvement makes the next debugging session easier.

With the right setup, the next production bug won’t require caffeine, tears, or bargaining with higher powers. Your backend will tell you exactly what went wrong, where it happened, and why. Debugging transforms from nightmare to routine investigation.

Build systems that explain themselves. Your future self will thank you.